Drivers Panel Blender Magazine

This tutorial was written by the amazing … and appeared in ….

Set extensions are the kind of visual effect that almost every movie released today has. Not just the big blockbusters: comedies, little indie dramas and just about every TV show have some type of set extension or background enhancement happening in them.

It can be anything from alien landscapes and futuristic buildings to simply replacing a traffic sign or a storefront. The same techniques are often useful if you want to remove something from a scene, like a wayward crew member or a car driving through the background of your medieval village. While set extension tasks can be accomplished using both 2D and 3D techniques, in this tutorial we’re going to focus on 2.5D to add an important exterior location to our shot. Perhaps the actual location was a protected park, and we were not allowed to build any kind of structure out there during shooting. If the interior scenes are all shot on a soundstage or at another location, then we only need a couple of establishing exterior shots, which is where matte painting and set extensions can save the day. And if you’ve not heard of 2.5D, it is exactly what it sounds like: a blend of 2D and 3D.

We’ll use 2D cards with matte paintings on them and position them around our 3D space. We are going to use Blender’s 3D space for much of this project, but keep in mind that the work we are doing is set up for compositing, not a 3D render. Matte painting, which is beyond the scope of this tutorial, goes hand in hand with set extensions.

Matte paintings are usually given to a compositor in layers so the compositor can make adjustments to (or completely remove) each individual part of the painting and layer them into the matchmoved scene as needed. These layers can range from very simple (as in our case with this tutorial) to very complex.

Do not rule out 3D completely, though. Naturally, you are welcome to create any 3D elements you want. Having the matchmove will make it relatively easy to drop things into place, but be sure to take great care when texturing and lighting. Look at light and shadow placements in the footage, and the overall tonality of the shot, and do your best to get your 3D in the same visual world.

Step 01 – 3D track the scene

Bring the footage into the Movie Clip Editor (MCE), then Set Scene Frames and Prefetch so it’s loaded into memory.

This is a simple shot, so there should be no problems finding at least eight points to track. Try to select points that are both close to and far from the camera. I tracked 16 different points, finding contrast areas on the hillside and the pathway. In this project I tried to stay away from the very edges of the image.

Step 02 – Solve the camera motion

After choosing Solve keyframes and setting Refine to Focal Length, K1, K2, my 16 points generated a solve error of 0.3. In the Scene Setup tab, press Set as Background, so Blender automatically places the frame sequence in your camera view, then click Setup Tracking Scene. There are some buttons in the MCE for aligning the orientation, but I usually find it just as easy to select the camera, set the pivot to the 3D cursor (at the centre), and rotate in the various views until it is aligned properly to the world.

Step 03 – Zero-weighted tracks

Having only 16 points makes it tough to get an idea of the geometry of the scene, so let’s use zero-weighted tracks to create many more. In the MCE, under the Track Settings tab, open Extra Settings and change the weight to 0. Go to the first frame and use Detect Features. Press F6 to bring up the options and change the threshold and margin until you have tons of tracks all over the shot. Track forward, then do the same from the last frame, tracking backwards.
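To make the weighting idea concrete, here is a small Python model. It is a sketch under our own assumptions, not Blender’s solver: the `Track` class, the plain weighted-mean error and the median-based cut-off in `cull_tracks` are all hypothetical. It shows why zero-weight tracks can be added by the hundred without disturbing the error the camera solve works against, and how a simple numeric rule could flag the kind of badly slipping track you would otherwise spot by eye in the graph editor.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Track:
    name: str
    error: float   # average reprojection error for this track, in pixels
    weight: float  # 1.0 = full influence on the solve, 0.0 = none


def weighted_solve_error(tracks):
    """Weighted mean error: zero-weight tracks add nothing to it."""
    total_weight = sum(t.weight for t in tracks)
    if total_weight == 0:
        raise ValueError("at least one track needs a non-zero weight")
    return sum(t.weight * t.error for t in tracks) / total_weight


def cull_tracks(tracks, factor=3.0):
    """Hypothetical rule: drop tracks whose error exceeds factor x the median error."""
    threshold = factor * median(t.error for t in tracks)
    return [t for t in tracks if t.error <= threshold]


solve_tracks = [Track("hill_01", 0.25, 1.0), Track("path_02", 0.35, 1.0)]
detected = [Track(f"detect_{i:03d}", 0.5, 0.0) for i in range(200)]
detected.append(Track("detect_bad", 9.0, 0.0))  # a track that slipped

# 201 zero-weight tracks leave the weighted solve error untouched...
print(weighted_solve_error(solve_tracks) == weighted_solve_error(solve_tracks + detected))  # True
# ...while the median rule removes the one that slipped.
print(len(cull_tracks(detected)))  # 200
```

The median is used rather than the mean so that a few wildly wrong tracks cannot drag the threshold up and hide themselves.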

In the MCE graph editor, select the most erroneous curves and delete them.

Step 04 – Create rough geometry

Solve the camera again, and your 3D scene should now look like a point cloud of the landscape. In the Geometry tab, press 3D Markers To Mesh. In the 3D view, you’ll see a vertex created for every track point. Turn off the track points by unchecking Motion Tracking in the Properties panel. Create geometry from the new vertices by going into Edit Mode, selecting four vertices, and pressing ‘F’ to create a face. Keep building new faces off that one, choosing vertices that seem to make sense for the hillside.

Step 05 – Bring in matte paintings

In File > User Preferences > Add-Ons, turn on Import Images as Planes (which you’ll then find under Shift+A > Mesh) and begin importing your matte painting elements. In this case, we’re using PNG images with alpha channels. When importing, be sure to use an Emission material and Use Alpha – Premultiplied. Place and scale them as necessary.
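Why does the alpha mode matter? With premultiplied (associated) alpha, the colour channels have already been multiplied by alpha, so laying one layer over another is a single multiply-add per channel. Treating straight alpha as if it were premultiplied leaves bright fringes around a painting’s soft edges. The per-pixel sketch below uses the standard Porter-Duff ‘over’ operator on plain float tuples; the helper names are our own and are not Blender functions.

```python
def premultiply(rgba):
    """Convert a straight-alpha RGBA tuple to premultiplied alpha."""
    r, g, b, a = rgba
    return (r * a, g * a, b * a, a)


def over(fg, bg):
    """Porter-Duff 'over' for premultiplied RGBA: fg + bg * (1 - fg_alpha)."""
    k = 1.0 - fg[3]
    return tuple(f + b * k for f, b in zip(fg, bg))


# A half-transparent white edge pixel of a matte painting over a black plate.
edge_straight = (1.0, 1.0, 1.0, 0.5)
plate = (0.0, 0.0, 0.0, 1.0)

print(over(premultiply(edge_straight), plate))  # (0.5, 0.5, 0.5, 1.0)
# Skipping the premultiply step over-brightens the edge: a bright fringe.
print(over(edge_straight, plate))               # (1.0, 1.0, 1.0, 1.0)
```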

Be sure to place the planes near vertices that lie in 3D space where that matte painted element should actually be.

Step 06 – Spice up the sky

The sky was clear on the day of shooting, but we can add some drama by replacing it or adding clouds to it. Import a sky image as a plane and make it very large. Push it back away from the camera, beyond the farthest track point. We don’t need a real sky dome because all the elements have light painted into them by the matte painting department. This sky will be just another layer we composite in.

Step 07 – Arrange the layers

We have to split these paintings into the correct layers for compositing.

For this particular scene, we could leave the hut and the bridge on the same render layer, but if we want to colour correct only one of them later, it will be easier if we just place everything on its own layer. Once you have split objects onto their own layers by pressing ‘M’, you then have to set up the corresponding render layers in the Render Layers panel. Place the rough landscape geometry on a layer that won’t render.

Step 08 – Rotoscope foreground objects

Obviously we’ve got to bring the actor and some of the pathway back over the matte paintings. Back in the MCE Tracking Properties, turn off the checkboxes for Pattern and Disabled to keep the view less cluttered. Press Tab to switch into Mask mode and Cmd/Ctrl-left click to begin drawing your first mask around the actor’s hair, pressing ‘I’ to set keyframes.

As you create the masks, be sure to label your mask layers clearly. I’m creating one mask for the actor and one for the pathway in front of the bridge. And don’t forget you can parent masks to tracks!

Step 09 – Moving to the compositor

Once in the compositor, Enable Nodes should already be activated because setting up the tracking scene creates a basic node layout for render layers and shadow passes.


Delete whatever you won’t need, duplicate the Render Layers node for every layer you set up in Step 7 and layer them so the sky is first (using a Mix node set to Screen), followed by the bridge, hut, and hut shadow (all using Alpha Over nodes). Create Mask nodes for your rotoscoping, combine them with a Math node set to Add (enable Clamp), and plug it into the factor of the Alpha Over node for the bridge.

Step 10 – Adjust the alpha channel

You’ll have to invert the matte on the bridge, and add a Blur to each mask before they are combined. You could also use a Dilate/Erode node if your roto isn’t accurate enough once blurred.
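The node chain in Steps 09 and 10 boils down to a few per-pixel formulas. The sketch below spells them out for single pixels, assuming premultiplied RGBA floats in the 0 to 1 range. The function names are hypothetical, the sky factor is fixed at 1.0 for simplicity, and the Alpha Over formula is a simplified model of how its factor input behaves rather than a copy of Blender’s source.

```python
def clamp01(x):
    return max(0.0, min(1.0, x))


def math_add_clamped(a, b):
    """Math node set to Add with Clamp enabled."""
    return clamp01(a + b)


def invert(matte):
    """Invert a single-channel matte."""
    return 1.0 - matte


def mix_screen(base, layer, fac):
    """Mix node set to Screen: 1 - (1 - a)(1 - b), blended in by fac."""
    return tuple((1.0 - fac) * a + fac * (1.0 - (1.0 - a) * (1.0 - b))
                 for a, b in zip(base, layer))


def alpha_over(bg, fg, fac):
    """Simplified Alpha Over on premultiplied RGBA; fac scales the foreground."""
    k = 1.0 - fac * fg[3]
    return tuple(b * k + f * fac for b, f in zip(bg, fg))


plate = (0.20, 0.30, 0.40, 1.0)    # footage pixel
sky = (0.50, 0.55, 0.70, 1.0)      # sky card pixel
bridge = (0.45, 0.35, 0.25, 1.0)   # opaque, premultiplied bridge pixel

with_sky = mix_screen(plate, sky, fac=1.0)

# Blurred roto values where the actor overlaps the bridge: 1 - clamp(0.8 + 0.6) = 0.0
bridge_fac = invert(math_add_clamped(0.8, 0.6))
print(alpha_over(with_sky, bridge, bridge_fac) == with_sky)  # True: actor stays in front

# Where neither roto covers the pixel, the bridge shows through fully.
print(alpha_over(with_sky, bridge, invert(math_add_clamped(0.0, 0.0))) == bridge)  # True
```

The Clamp option matters here: without it, overlapping blurred masks can sum past 1.0, and the inverted factor would go negative.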

For the sky, run a Separate RGBA node off the Movie Clip node and plug a Color Ramp into the blue channel. Experiment with adjusting the sliders until you get a nice matte for the sky, then subtract the actor’s hair roto with a Math node. To get the clouds sitting cleanly behind the actor, plug that Math node into the factor of the sky’s Mix node.
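A two-stop Color Ramp is just a clamped linear remap, so the keying step can be sketched in a few lines. This is an illustration under stated assumptions: the stop positions 0.55 and 0.75 are made-up starting values, the subtraction is clamped at zero (turn on Clamp in the Math node to get the same behaviour), and `sky_matte` is a hypothetical helper, not a Blender call.

```python
def color_ramp(value, black_pos, white_pos):
    """Two-stop linear Color Ramp: black at black_pos, white at white_pos."""
    if white_pos <= black_pos:
        raise ValueError("white_pos must be greater than black_pos")
    t = (value - black_pos) / (white_pos - black_pos)
    return max(0.0, min(1.0, t))


def sky_matte(rgba, hair_roto, black_pos=0.55, white_pos=0.75):
    """Key the sky from the blue channel, then subtract the hair roto (clamped)."""
    _, _, blue, _ = rgba  # Separate RGBA: keep only the blue channel
    matte = color_ramp(blue, black_pos, white_pos)
    return max(0.0, matte - hair_roto)


print(sky_matte((0.45, 0.60, 0.85, 1.0), hair_roto=0.0))  # 1.0 (clear sky)
print(sky_matte((0.35, 0.30, 0.25, 1.0), hair_roto=0.0))  # 0.0 (hillside)
print(sky_matte((0.45, 0.60, 0.85, 1.0), hair_roto=1.0))  # 0.0 (hair in front of sky)
```

Pulling the two stops closer together gives a harder matte; spreading them apart softens the transition along the horizon.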

Step 11 – Add 3D element

Now let’s dip into real 3D to bring in some added realistic details. I chose to make a bird flying in the background, which I rendered with an alpha channel from a separate project file and placed on a 2D card in our main scene. Other fun details could be a clothes line hanging outside with fabric blowing in the breeze, or maybe some added trees or bushes.

You could even shoot an actor against a greenscreen and place that footage on a 2D card out there in front of the hut, if you liked.

Step 12 – Finalise the shot

Now that all the elements are there and layered correctly, feel free to dial things in to your liking. Maybe add some light wrap to the hut, and make sure the colour correction is working on all the individual elements. Check your black levels and brightness levels by making the composite overly bright or overly dark temporarily (throw a Color Correction node at the end of the node tree and crank up the Gamma). If you’re using footage from a camera that has noticeable film grain, you’ll have to add matching grain to your matte painted elements.

Made in Blender (clockwise from top left): The Blender Foundation’s ‘open movie’ Sintel; Red Cartel’s animated TV series Kajimba; indie VFX movie Project London; Red Cartel’s print illustration work for P&O; and animation for Dutch TV show Klaas Vaak.

Blender may be free, but it isn’t just a tool for hobbyists. The powerful open-source 3D package is now used on a variety of professional projects, from the Blender Foundation’s own ‘open movies’ to illustrations, animated commercials and even visual effects work. While the fundamentals of Blender are well covered by training materials available online, there is little information targeted specifically towards this new group of professional users. To help you get the most from the software, we asked five of the world’s leading Blender artists to provide their tips for working quicker and smarter under real-world production conditions.

Don’t duplicate: instance instead.

Proportional editing can create an organic feel to a scene, for example when placing plants or rocks.

Say you have a scene containing hundreds of individual objects: for example, rocks or plant geometry. To position each of them manually would take forever, so to speed up the process, use the Proportional Edit tool.

Select a single object and press O to turn on Proportional editing. Now press G, S or R to move, scale or rotate respectively, while rolling the mouse wheel. You’ll see that all of the objects in the Proportional editing region (shown by a white circle) are affected. The mouse wheel changes the size of the region. Proportional Edit can be set to many different Falloff types (available from the drop-down menu next to the blue circle Proportional Edit button). Selecting Random will cause random translation, rotation and scaling of objects within the soft-selection region, which is useful for ‘messing up’ a scene to make it feel more organic. Since this trick works across all visible scene layers, put any objects that you don’t want to affect into a separate layer, then simply turn that layer off.
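Conceptually, each object inside the circle receives a share of the transform, weighted by a falloff curve that runs from 1 at the selected object down to 0 at the circle’s edge. The sketch below models a move with a smoothstep curve, and multiplies in a random number to imitate the Random option. Both curves are our own illustration of the idea rather than Blender’s exact falloff formulas, and `proportional_move` and the rock positions are hypothetical.

```python
import math
import random


def smooth_falloff(distance, radius):
    """Weight in [0, 1]: 1 at the selected object, easing to 0 at the radius."""
    if distance >= radius:
        return 0.0
    x = 1.0 - distance / radius
    return x * x * (3.0 - 2.0 * x)  # smoothstep curve


def proportional_move(positions, selected, offset, radius, randomize=False, seed=0):
    """Move `selected` by `offset`; neighbours inside `radius` move by a weighted share."""
    rng = random.Random(seed)
    origin = positions[selected]
    moved = {}
    for name, pos in positions.items():
        weight = smooth_falloff(math.dist(pos, origin), radius)
        if randomize and name != selected:
            weight *= rng.random()  # uneven moves 'mess up' the layout
        moved[name] = tuple(p + weight * o for p, o in zip(pos, offset))
    return moved


rocks = {"rock_a": (0.0, 0.0, 0.0), "rock_b": (1.0, 0.0, 0.0), "rock_c": (5.0, 0.0, 0.0)}
moved = proportional_move(rocks, "rock_a", offset=(0.0, 0.0, 1.0), radius=2.0)
print(moved["rock_b"])  # (1.0, 0.0, 0.5): halfway out, so half the lift
print(moved["rock_c"])  # (5.0, 0.0, 0.0): outside the circle, untouched
```

Enlarging the radius (what the mouse wheel does interactively) spreads the same move over more of the scene.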

James Neale, founding partner of …

04. Use Pose Libraries for blocking.

Setting up libraries of standard facial expressions speeds up your first lip sync pass.

Pose Libraries are a great way to rough in animation, particularly for facial animation and lip sync.

This is especially useful if your rig uses bones and drivers rather than exclusively relying on shape keys for phoneme shapes. I like to make a bone group for my lip sync controls and use those controls to create my phonemes. Each phoneme gets saved as a pose in my character’s Pose Library (Shift+L). When animating, select the bones in the lip sync bone group and press Ctrl+L to enter a library preview mode. You can then use your mouse’s scroll wheel or Page Up/Page Down to cycle through the poses in your library. Choose your pose and insert your keyframes.


This works as your first rough pass on the lip sync to get the timing right. On subsequent passes, you’re free to adjust the facial controls to add more personality to your animation. And because a Pose Library is just a special kind of action, you can easily append it to any scene.

Jason van Gumster, owner of …

05. Use Network Render to set up an ad hoc renderfarm.

The Client machine automatically receives the rendered frames from network renders.

Start by switching the render engine from Blender Render to Network Render. On your master node, choose Master from the Network Settings panel of Render Properties. When you click Start Service, you can view the status of the farm by opening a web browser on that machine and pointing it to …

With the master node running, go to the other machines and set them up as slaves. It’s the same steps as for the master node: just choose Slave from Network Settings instead of Master. Assuming the machines are on the same network, when you click Start Service, the slave node should automatically find the master.

To render, go to the machine you want to render from and set up your client by switching to Network Render and choosing Client from Network Settings. If you click the refresh button, the client should automatically find the master node. Now you can render your animation on your ad hoc farm by clicking the Animation on network button in the Job Settings panel.

Use Damped Track for eye tracking.

Damped Track gives better results than the Track To constraint when animating eyes. Note how the character’s eyes now point at the target.

Blender’s Track To constraint is handy for making objects or bones point at a target.

Unfortunately, it is also based on gimbal (or Euler) rotations, which can make it behave oddly. Sometimes that behaviour is what you want (for turrets, for example) – but usually, it’s not (on eyes, for example): what you would like is for the object to take the most direct rotation path from its starting rotation to point at the target. Fortunately, Blender has a constraint that does exactly that: it’s called Damped Track. In most cases, substituting Damped Track for Track To will give you the result you want.
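The ‘most direct rotation path’ is the shortest-arc rotation: turn about the axis perpendicular to both the current pointing direction and the direction to the target, by the angle between them. The sketch below computes that axis and angle with plain vector maths and checks the result with Rodrigues’ rotation formula. It illustrates the idea behind Damped Track rather than reproducing Blender’s constraint code, and the eye and target positions are made up.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalise a zero-length vector")
    return tuple(c / length for c in v)


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shortest_arc(current_dir, eye_pos, target_pos):
    """Unit axis and angle (radians) that swing current_dir straight onto the target."""
    a = normalize(current_dir)
    b = normalize(tuple(t - e for t, e in zip(target_pos, eye_pos)))
    angle = math.acos(max(-1.0, min(1.0, dot(a, b))))
    axis = cross(a, b)
    if dot(axis, axis) < 1e-24:
        # a and b are (anti)parallel: any axis perpendicular to a will do.
        helper = (1.0, 0.0, 0.0) if abs(a[0]) < 0.9 else (0.0, 1.0, 0.0)
        axis = cross(a, helper)
    return normalize(axis), angle


def rotate(v, axis, angle):
    """Rodrigues' rotation of vector v about a unit axis."""
    c, s = math.cos(angle), math.sin(angle)
    kxv, kdv = cross(axis, v), dot(axis, v)
    return tuple(v[i] * c + kxv[i] * s + axis[i] * kdv * (1.0 - c) for i in range(3))


# Hypothetical eye at the origin, looking along +Y; target off to the side.
axis, angle = shortest_arc((0.0, 1.0, 0.0), (0.0, 0.0, 0.0), (1.0, 1.0, 0.0))
print(axis, math.degrees(angle))             # axis along -Z, about 45 degrees
print(rotate((0.0, 1.0, 0.0), axis, angle))  # now points at the target
```

Because the rotation is built from one axis and one angle, there is no sequence of Euler axes to flip through, which is where Track To’s odd behaviour on eyes comes from.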

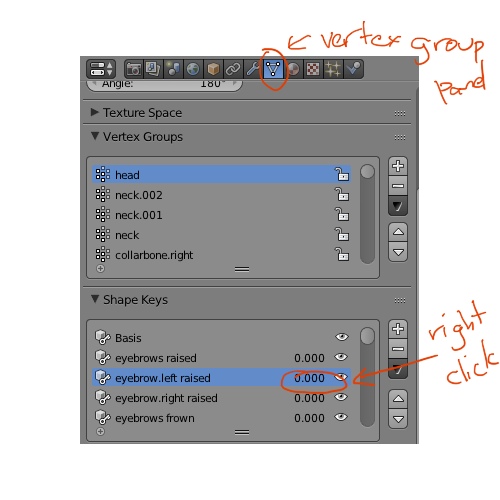

Use sculpting to fix errors in animation.

Blender’s sculpting tools can be used to control the silhouette of a character over the course of an animation: easy to draw, but hard to do with bones!

One of the coolest uses for the sculpt tool was shown to me by animator and teacher Daniel Martinez Lara. Instead of just sculpting static objects, you can use it to tweak the shape of characters as they move over time in order to polish animations. This enables you to fix deformations, add extra stretching or change the outline of a pose: things that are hard to do with bones, but easy to draw. This only works in the very newest builds of Blender (2.56+). After animation is completed, go to Mesh Properties and locate the Shape Keys panel.

Select the Basis key in the list and click the plus icon to add a new shape key. Next, move the playhead to a frame you want to tweak, click the pin icon and enter sculpt mode. For sculpting, I prefer to use the Grab tool for making larger shape changes, and then the Smooth tool to smooth out problem areas. Once you are happy with your changes, exit sculpt mode and play with the shape key’s Value slider.
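A relative shape key stores an alternative position for every vertex, and its Value slider blends linearly between the Basis and that sculpted shape: each vertex ends up at basis + value × (key − basis). Keying Value from 0 up to 1 and back down is what fades the sculpted correction in and out. The sketch below models that blend for two made-up vertices; `blend_shape_keys` is a hypothetical helper, not a Blender API call.

```python
def blend_shape_keys(basis, keys):
    """Relative shape-key blend: basis + sum(value * (key - basis)) per vertex.

    basis: list of (x, y, z) vertex positions.
    keys:  list of (value, positions) pairs, each positions list matching basis.
    """
    result = []
    for i, base in enumerate(basis):
        offset = [0.0, 0.0, 0.0]
        for value, positions in keys:
            for axis in range(3):
                offset[axis] += value * (positions[i][axis] - base[axis])
        result.append(tuple(b + o for b, o in zip(base, offset)))
    return result


basis = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
sculpt_fix = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.4)]  # Grab-tool tweak on one vertex

for value in (0.0, 0.5, 1.0):  # e.g. Value keyed from 0 to 1 over a few frames
    print(value, blend_shape_keys(basis, [(value, sculpt_fix)]))
```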

Hover your cursor over the slider and press the I key to insert keyframes, animating the effect in and out over time.

…, animator on Big Buck Bunny and Sintel

08. Feed Compositor nodes to the VSE via scene strips.

Using scene strips to help work smoothly between the Node Editor and the VSE.

When using Blender for motion graphics, there’s some cool handshaking you can do between the Node Editor and Video Sequence Editor. If a shot requires more involved effects than the VSE can provide, switch to the Compositing screen layout and create a new empty scene (I like to name the scene after the shot). Use the Image input node to bring your clip into the Node Editor, adjusting the start and end frames in the node and the scene as necessary. From this point, you can add whatever cool compositing effects you want. When you switch back to the Video Editing screen (it should still be in your editing scene), replace your shot by adding a scene strip in the VSE for your compositing scene. As a bonus, if you delete all of the screen layouts except for Compositing and Video Editing, you can quickly bounce between your composite scene and your editing session using Ctrl+left arrow and Ctrl+right arrow.

Put colour into shadows and global illumination.

Separate your shadows and GI/AO layers within the compositor to adjust their colours.

By default in Blender, shadows and GI are black. This doesn’t always give the best result. If you look at Pixar’s work, for example, the shadow is usually a darker, more saturated version of the diffuse colour. You can achieve this effect in the compositor by specifying your layer to output a separate GI and shadow pass. Use a Mix node set to Multiply to multiply your shadow/GI with a colour of choice, then mix that back into your render pass for best effect. We sometimes render a scene (Scene01) with no GI or shadows at all, and an identical copy of that same scene (Scene02) to get just the GI and shadows layers by themselves.
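Multiplying by a colour is what tints the pass: a neutral grey shadow multiplied by a saturated blue comes out darker and bluer. The sketch below models a Mix node set to Multiply, including its factor, for a single pixel. The shadow value and tint are invented, and this covers only the tinting step; how you combine the tinted pass back into the beauty render depends on your setup.

```python
def mix_multiply(a, b, fac=1.0):
    """Mix node set to Multiply: blend a towards a * b by fac, per channel."""
    return tuple(x * (1.0 - fac + fac * y) for x, y in zip(a, b))


def saturation(rgb):
    """HSV-style saturation: (max - min) / max."""
    hi, lo = max(rgb), min(rgb)
    return 0.0 if hi == 0 else (hi - lo) / hi


grey_shadow = (0.30, 0.30, 0.30)  # neutral shadow value from the pass
tint = (0.40, 0.50, 1.00)         # hypothetical cool blue shadow colour

tinted = mix_multiply(grey_shadow, tint)
print(tinted)                                       # darker in red and green
print(saturation(grey_shadow), saturation(tinted))  # grey has none; tinted has plenty
```

Lowering `fac` pulls the result back towards the untinted pass, which is a quick way to dial in subtlety.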

Use the compositor back in Scene01 to composite those layers from Scene02, using the colour picker inside the Mix node set to Multiply or Add to achieve the shadow colour we need.

Use Only Insert Available when autokeying.

Checking the Only Insert Available option means Auto Keyframing only affects scene elements that are already animated.

Blender, like many 3D applications, has a feature that will automatically insert keyframes when you move an object or bone. Blender calls this feature Auto Keyframing or ‘autokey’. I prefer animating this way because it saves on keystrokes, and because otherwise I sometimes forget to key part of a pose I’ve been working on. The drawback of using autokey is that it also sets keys on things you aren’t intending to animate. For example, if I tweak the position of the camera or lights, and then tweak them again later on at a different frame, this will result in them being animated, even if I don’t want it to be.

Fortunately, Blender offers a way to solve this: Only Insert Available. With this option toggled on, autokey will only set keys on things that are already animated. The first time you key something, you have to do it manually, but from then on the process is automatic.
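The rule behind Only Insert Available is small enough to model directly: autokey only writes to channels that already have animation data. The `AutoKeyer` class below is a hypothetical stand-in for that behaviour, with a dictionary playing the part of existing F-curves; it is not Blender’s API.

```python
from dataclasses import dataclass, field


@dataclass
class AutoKeyer:
    only_insert_available: bool = True
    # (object, property) -> {frame: value}; stands in for existing F-curves.
    curves: dict = field(default_factory=dict)

    def key_manually(self, obj, prop, frame, value):
        """The manual first key: your way of saying 'animate this'."""
        self.curves.setdefault((obj, prop), {})[frame] = value

    def on_transform(self, obj, prop, frame, value):
        """Called when something is moved while Auto Keyframing is on."""
        channel = (obj, prop)
        if self.only_insert_available and channel not in self.curves:
            return False  # not animated yet: leave it unkeyed
        self.curves.setdefault(channel, {})[frame] = value
        return True


keyer = AutoKeyer()
keyer.key_manually("Hero", "hand_ik.location", frame=1, value=(0.0, 0.0, 0.0))
print(keyer.on_transform("Hero", "hand_ik.location", 12, (0.1, 0.0, 0.2)))  # True
print(keyer.on_transform("Camera", "location", 12, (0.0, -8.0, 1.5)))       # False
```

With the option switched off (`only_insert_available=False`), the camera tweak would have been keyed as well, which is exactly the drawback described above.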

This lets you treat manual keying as a way of telling Blender, “I want this to be animated.” From then on, Blender takes care of the rest with autokey.

… is a freelance 3D artist

11. Set up master files to grade large projects.

Setting up a master file to control the final grade for an entire project minimises time spent testing renders: a trick Red Cartel used on its animated short, Lighthouse.

Most large animation projects require you to keep track of many individual shots and grade them consistently at the end.

You can use the Blender sequencer and compositor to do this. First, start an empty .blend file.

This will be your master file. Link in every scene you need from the individual .blend shot files and place them in order along the timeline of the sequencer in the master file. (This helps the editor, since the Blender sequencer produces an OpenGL version of each scene, making it easy to see the latest work from each scene in real time.) You can now set the look and feel for each section of the animation. Select a group of shots that must have the same visual properties, and group those nodes together inside the master file, calling the group ‘Master Comp’ or something suitably witty. Go to each of the original individual shot files and link back to the Master Comp group.

Now whenever any of the artists updates their work (for example, to update an asset, the animation, lighting, or scene-specific compositing) they only have to tell the person in charge of the master file to reload their particular scene, and the same global feel will be preserved. Since the master file controls both the edit and the global composite group (the grade), rendering via that master file enables you to render the entire project with the exact edit decision list and composite gamut required.

Set up Fake users to manage remote collaboration.

An asset created for in-store merchandising for Southern Comfort. Blender’s library systems help keep track of all the different parts during remote collaborations.

Red Cartel often collaborates with artists working remotely outside the studio. To keep the pipeline as smooth as possible, we use Blender’s excellent library systems. Data transfer for large scene files takes a long time, so we ask animators to save out their Blender scene with unique names for their character/camera actions. If they then delete all the relevant working geometry and give their most recent action a Fake user (the F button next to that action inside the Dopesheet/Action Editor), that datablock is retained inside the otherwise empty .blend file. This reduces the file size enormously, making it much quicker to transfer over the internet.
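The trick works because Blender does not save datablocks that nothing uses: once the character is deleted, its action has zero users and would be dropped when the file is saved. The Fake User flag counts as an extra user, so the action survives. The sketch below models that reference-counting rule with a hypothetical `Datablock` class and invented action names; it is a simplification, not Blender’s actual code.

```python
from dataclasses import dataclass


@dataclass
class Datablock:
    name: str
    users: int = 0
    use_fake_user: bool = False

    @property
    def effective_users(self):
        return self.users + (1 if self.use_fake_user else 0)


def blocks_written_on_save(blocks):
    """Keep only datablocks that still have at least one real or fake user."""
    return [b.name for b in blocks if b.effective_users > 0]


actions = [
    Datablock("Hero_walk_v07", users=0, use_fake_user=True),  # kept via fake user
    Datablock("Hero_walk_v06", users=0),                      # orphan: dropped
    Datablock("Cam_push_v03", users=1),                       # still assigned
]
print(blocks_written_on_save(actions))  # ['Hero_walk_v07', 'Cam_push_v03']
```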

Once uploaded, our local artists simply append or link that data into the latest render scene in order to get the remote artist’s updated animation. We use Dropbox (dropbox.com) heavily for this, and since the master edit/composite file refers to the Dropbox folder for each remote artist, all the latest animations are ‘automagically’ updated in the edit.

Use Rigify for rapid rigging.

Rigify speeds up the process of creating a character rig, even weighting it automatically.

Rigify is an incredibly useful tool for getting characters rigged in a jiffy. Instead of spending days setting up a rig by hand, adding constraints, scripts and controllers, it makes the entire process a five-minute affair.

Rigify is actually a plug-in, but it’s distributed with the latest releases of Blender. (I’m using version 2.56.) Before you can use it, you need to enable it by selecting File > User Preferences > Add-Ons and locating Rigify in the list. Click the check mark. Next, add the ‘meta-rig’ (the default initial rig you will use to create your own custom set-up) via Add > Armature > Human (Meta-Rig).

Position and scale this to match your character. Enter Edit mode, and tweak the proportions further until all the bones line up with your mesh. Remember to use X-Axis Mirror in the Armature tools panel. Go back to Object mode, and locate the Rigify Buttons panel in Armature Properties.

Click Generate to create your final rig and discard the meta-rig. Parent your mesh to your generated rig and select With Automatic Weights in the popup. The last (optional) step is to run a UI script to add a nice interface to the rig. Open the Blender Text Editor, and select rigui.py from the data list. Press Run Script in the header, and look in the properties panel (N) of the 3D view.

You’ll have a nice list of context-sensitive controls for bones.